If you work with Kubernetes, you know this pain: estimating the cost of a specific Kubernetes workload is really hard. We covered why controlling cloud costs is so difficult in a separate post.

But there are a few things you can do to ease this pain and arrive at a more accurate estimation of your workload costs.

Let’s go over the strategies to help you control Kubernetes cost and improve cluster management.

Problem 1: Kubernetes can’t stretch one pod across multiple nodes

Imagine that you have a node that costs $50 per month. It has 7 GB of memory and 7 CPUs. Then you have a workload that requires 3 GB of memory and 3 CPUs per pod to run.

You can fit up to 2 pods worth of that workload in a single node.

But what if you want to add a third pod of the same kind? Get ready to pay another $50/month for it. There’s not enough space left for this pod in your node.

On top of that, you also end up with a little bit of wasted space on your first node. You’ve got 1 GB of memory left and 1 CPU. Your second node is under-utilized as well since you’re using less than half of its resources.

This is the primary issue here: it’s impossible to split up one pod across multiple nodes in Kubernetes. Just like you can’t split a shipping container into two parts and then place them on two different ships.

So if your node’s capacity isn’t an exact multiple of what one pod needs, you’re going to end up with some capacity that goes to waste. This is one of the major reasons behind cloud waste.
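
The arithmetic behind this example can be sketched in a few lines of Python. This is a minimal illustration, assuming the whole node is available to pods (real nodes reserve some capacity for system components); the function names are hypothetical and not part of any Kubernetes tooling.

```python
import math

def pods_per_node(node_cpu, node_mem_gb, pod_cpu, pod_mem_gb):
    """Whole pods that fit on one node. A pod cannot span two nodes,
    so the scarcer resource decides and fractions are dropped."""
    return min(node_cpu // pod_cpu, node_mem_gb // pod_mem_gb)

def nodes_needed(pod_count, node_cpu, node_mem_gb, pod_cpu, pod_mem_gb):
    """Number of identical nodes required to run pod_count pods."""
    per_node = pods_per_node(node_cpu, node_mem_gb, pod_cpu, pod_mem_gb)
    if per_node == 0:
        raise ValueError("one pod does not fit on this node type")
    return math.ceil(pod_count / per_node)

# Figures from the example: a $50/month node with 7 CPUs and 7 GB,
# and a workload that needs 3 CPUs and 3 GB per pod.
node_cpu, node_mem_gb, node_price = 7, 7, 50
pod_cpu, pod_mem_gb = 3, 3
pod_count = 3

nodes = nodes_needed(pod_count, node_cpu, node_mem_gb, pod_cpu, pod_mem_gb)
monthly_cost = nodes * node_price
unused_cpu = nodes * node_cpu - pod_count * pod_cpu
unused_mem_gb = nodes * node_mem_gb - pod_count * pod_mem_gb

print(f"{nodes} nodes, ${monthly_cost}/month, "
      f"{unused_cpu} CPUs and {unused_mem_gb} GB unused")
```

Adding the third pod doubles the bill from $50 to $100 per month while leaving 5 CPUs and 5 GB idle across the two nodes.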

Related issue: resource asymmetry

What if your workload is very CPU-intensive and requires only 2 GB of memory but 4 CPUs to run?

In this case, you can only fit one pod worth of this workload in the same sort of node. As you can see, plenty of unused capacity is going to waste here.
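
To see which resource is the bottleneck and how much capacity gets stranded, here is a rough sketch under the same simplifying assumption that the full node capacity is available to pods. The helper name `stranded_capacity` is made up for this illustration.

```python
def stranded_capacity(node_cpu, node_mem_gb, pod_cpu, pod_mem_gb):
    """Report how many pods fit on a node, which resource runs out first,
    and what is left over once the node is full."""
    fit_by_cpu = node_cpu // pod_cpu
    fit_by_mem = node_mem_gb // pod_mem_gb
    pods = min(fit_by_cpu, fit_by_mem)
    return {
        "pods": pods,
        "limiting": "cpu" if fit_by_cpu <= fit_by_mem else "memory",
        "unused_cpu": node_cpu - pods * pod_cpu,
        "unused_mem_gb": node_mem_gb - pods * pod_mem_gb,
    }

# The 7 CPU / 7 GB node with a CPU-intensive pod (4 CPUs, 2 GB).
result = stranded_capacity(7, 7, pod_cpu=4, pod_mem_gb=2)
print(result)
```

Memory alone would allow three pods, but CPU allows only one, so 3 CPUs and 5 GB of memory sit unused.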

How to deal with this?

Rightsizing your nodes is the best move here. For example, on a node with 3 GB of memory and 3 CPUs, a Kubernetes workload that requires 1 GB of memory and 1 CPU per pod fits perfectly: three pods fill the node exactly, so nothing goes to waste.

And when it comes to memory- or CPU-intensive workloads, many cloud providers offer memory-optimized and compute-optimized instance types whose memory-to-CPU ratio matches such workloads more closely.

Problem 2: Optimistic estimation

Imagine that you have one small node of 4 CPUs and 4 GB that costs you $72 per month. Estimating the total cost should be easy, right?

If you pay $72 for both memory and CPU, it means that $36 goes to memory and $36 to CPU. And if you divide that by 4, you get the price per 1 GB and 1 CPU – that’s $9 each.

Imagine that your workload requires 2 GB of memory and 2 CPUs. How much will it cost per pod? All it takes is multiplying $9 by the number of GB and CPUs your workload requires. Here’s how you calculate it:

$9/CPU × 2 CPUs + $9/GB × 2 GB = $36

The total cost amounts to $36. This makes sense because you can fit two such workloads on a $72 node.

Now, let’s imagine that your second workload is CPU-intensive and requires 1 GB of memory and 3 CPUs. If you run the same calculation, you still get $36. But you can only fit one such pod in your node. So in reality, this workload will cost you $72 per month. That’s why cost estimation for Kubernetes workloads is so tricky.
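
The gap between the two figures can be reproduced with a short sketch. It assumes the node runs only this one workload, so the whole node price is split across the pods that fit; the function names are illustrative.

```python
NODE_PRICE = 72.0   # $ per month, from the example
NODE_CPU = 4
NODE_MEM_GB = 4

# Half of the node price is attributed to CPU and half to memory.
PRICE_PER_CPU = NODE_PRICE / 2 / NODE_CPU    # $9 per CPU
PRICE_PER_GB = NODE_PRICE / 2 / NODE_MEM_GB  # $9 per GB

def optimistic_cost(cpu, mem_gb):
    """Price a pod purely by the resources it asks for."""
    return cpu * PRICE_PER_CPU + mem_gb * PRICE_PER_GB

def effective_cost(cpu, mem_gb):
    """Node price divided by the number of whole pods that actually fit."""
    pods = min(NODE_CPU // cpu, NODE_MEM_GB // mem_gb)
    if pods == 0:
        raise ValueError("one pod does not fit on this node")
    return NODE_PRICE / pods

for name, cpu, mem_gb in [("balanced", 2, 2), ("CPU-intensive", 3, 1)]:
    print(f"{name}: optimistic ${optimistic_cost(cpu, mem_gb):.0f}, "
          f"effective ${effective_cost(cpu, mem_gb):.0f}")
```

For the balanced workload both numbers are $36. For the CPU-intensive one the optimistic estimate stays at $36 while the effective cost is $72.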

How to deal with this?

You can use a different method of cost estimation. Take the larger of the two requirements, CPU or memory, and assume in the cost calculation that the smaller resource is just as large.

This method helps you understand the true cost of memory- or CPU-intensive workloads, which are usually the ones that leave companies with extra capacity going to waste.

To calculate it:

- Select the largest resource requirement (in this case – 3 CPUs)

- Assume the other resource is the same size (i.e. 3 GB, not 1 GB of memory)

- Calculate the price estimate the same way as before

In this case, the calculation is (3 CPUs + 3 GB) × $9 = $54. That’s much closer to the actual $72. It also hints at how much unused capacity is left in the node.
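
The steps above can be sketched as a small function. Comparing a CPU count with a GB figure directly only makes sense because the example node has a 1:1 ratio of CPUs to GB and the same $9 unit price for both; that is an assumption of this sketch, and other node shapes would need the values normalized first.

```python
def optimistic_cost(cpu, mem_gb, price_per_cpu=9.0, price_per_gb=9.0):
    """Price the pod by exactly what it requires."""
    return cpu * price_per_cpu + mem_gb * price_per_gb

def conservative_cost(cpu, mem_gb, price_per_cpu=9.0, price_per_gb=9.0):
    """Price the pod as if both resources were as large as the larger one."""
    largest = max(cpu, mem_gb)
    return largest * price_per_cpu + largest * price_per_gb

# CPU-intensive workload from the example: 3 CPUs, 1 GB.
print(optimistic_cost(3, 1))     # 36.0
print(conservative_cost(3, 1))   # 54.0

# Balanced workload: both methods agree.
print(optimistic_cost(2, 2))     # 36.0
print(conservative_cost(2, 2))   # 36.0
```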

This conservative estimation method prepares you for the worst-case scenario when it comes to Kubernetes bin-packing workloads onto your nodes.

The conservative and optimistic methods will often arrive at similar estimates. The estimates diverge for CPU- or memory-intensive workloads. To estimate those properly, your method needs to assume that there will be some waste (i.e. be the conservative one).

When should you use this method?

It depends. For example, if you’ve already invested time into optimizing your instance types or your nodes are much larger than your average workload, you can get away with the optimistic method and count on k8s to pack them cost-efficiently. In any other case, it’s smarter to stick with the conservative method.

Problem 3: Varied resource usage

Another problem that affects cost estimation is that a Kubernetes workload doesn’t use a fixed amount of memory and CPU. In fact, resource usage can vary from one second to the next. As a result of these fluctuations, pods may be evicted and rescheduled onto other nodes, or get killed.

To help developers deal with this issue, Kubernetes configuration offers two fields for managing Kubernetes resources:

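- Requests – the amount of CPU and memory that Kubernetes reserves for a workload when scheduling it onto a node. You can treat it as the workload’s minimum resource allocation.

- Limits – they set the maximum resource usage of the workload. For example, if a container attempts to use more memory than its limit, it gets killed and restarted; if it attempts to use more CPU than its limit, it gets throttled.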

The actual resource usage of a workload usually lands between the request and the limit, fluctuating from second to second.

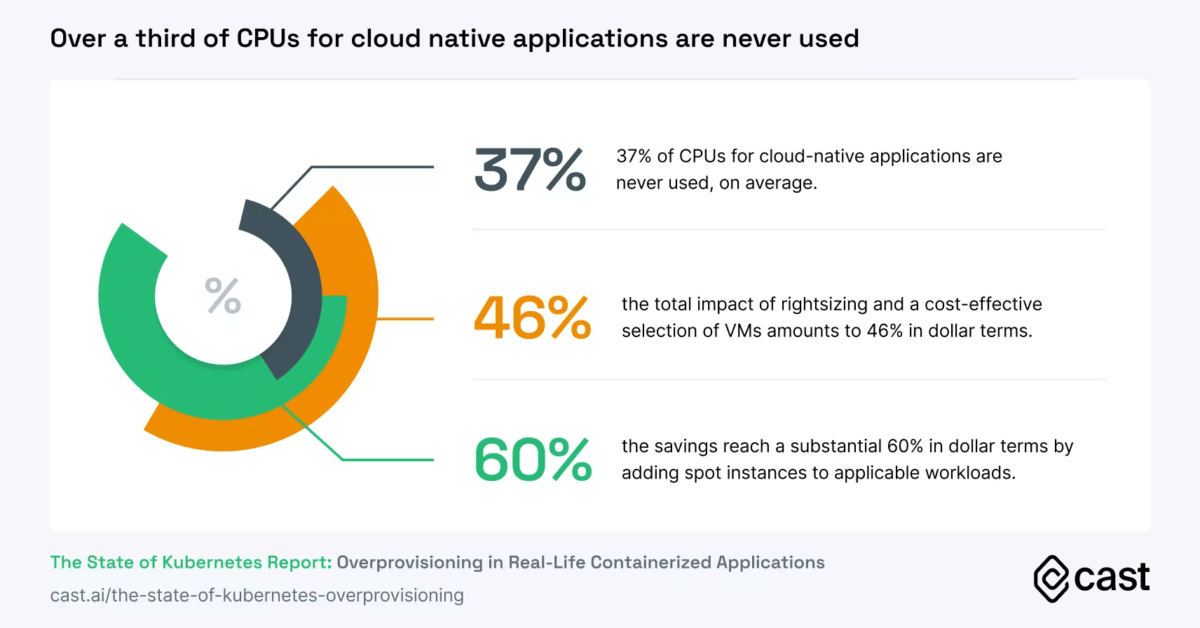

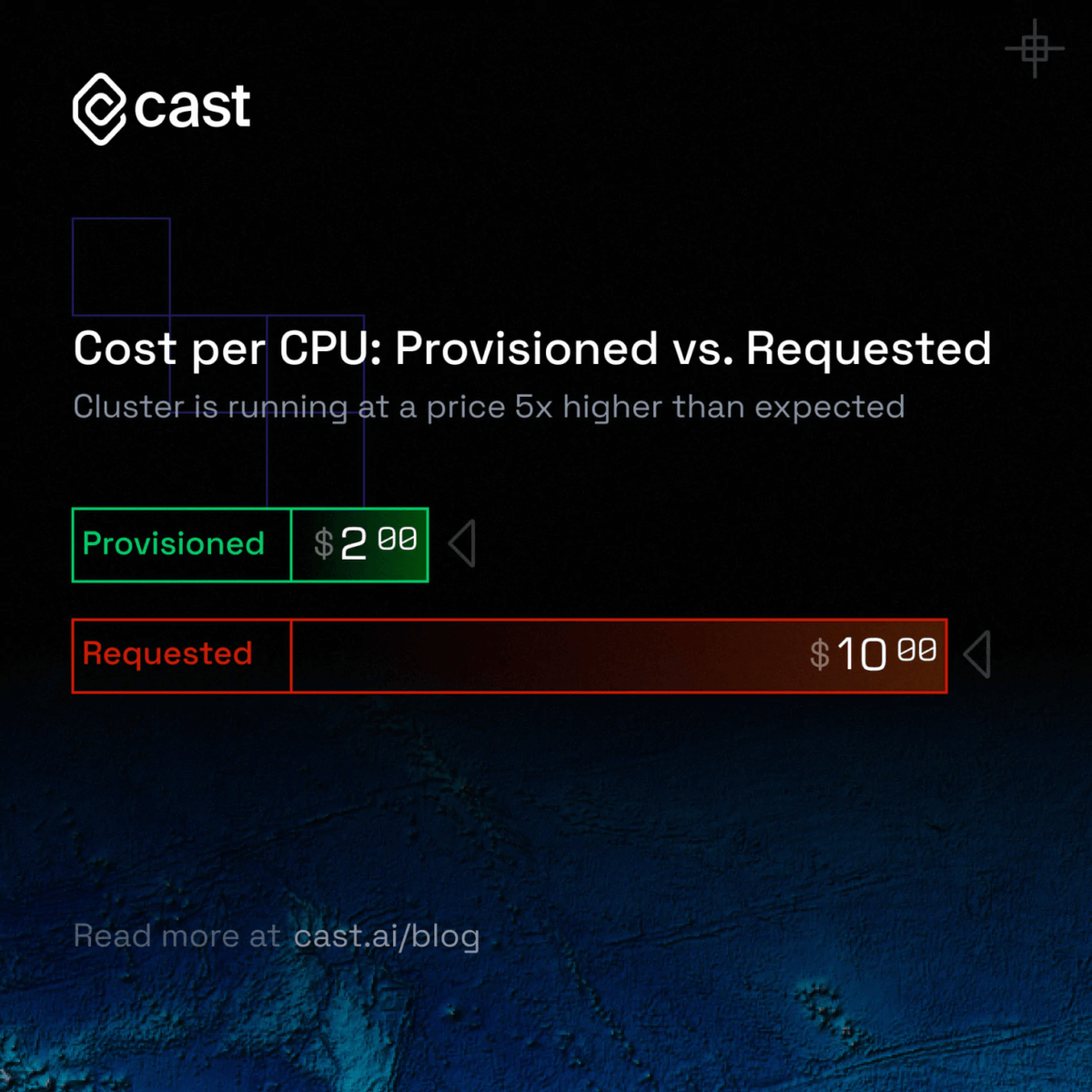

When estimating your Kubernetes cost, consider how many resources your workload requests vs. how much is provisioned for it. You might find a gap between these two figures representing another layer of wasted cloud capacity.

How to deal with this?

To estimate the bottom-line workload cost, consider the fluctuating number, and calculate its average over time. You can also look at requests and limits to come up with a range of possibilities – or just take an average of the two.
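
Here is one way this could look in practice. The usage samples, requests, and limits below are made-up numbers for illustration, and the $9 unit prices are carried over from the earlier $72 node example.

```python
from statistics import mean

PRICE_PER_CPU = 9.0  # $ per CPU per month, from the earlier example
PRICE_PER_GB = 9.0   # $ per GB per month, from the earlier example

def monthly_cost(cpu, mem_gb):
    """Price a given amount of CPU and memory at the unit prices above."""
    return cpu * PRICE_PER_CPU + mem_gb * PRICE_PER_GB

# Hypothetical usage samples collected over time for one pod.
cpu_samples = [1.0, 1.5, 2.0, 1.5]
mem_samples_gb = [1.0, 2.0, 2.0, 1.0]

# Hypothetical requests and limits as (CPUs, GB of memory).
requests = (1.0, 1.0)
limits = (2.0, 2.0)

average_cost = monthly_cost(mean(cpu_samples), mean(mem_samples_gb))
low = monthly_cost(*requests)    # cost if usage stays at the requests
high = monthly_cost(*limits)     # cost if usage sits at the limits
midpoint = (low + high) / 2

print(f"average usage: ${average_cost:.2f}")
print(f"range: ${low:.2f} to ${high:.2f}, midpoint ${midpoint:.2f}")
```

With real data, the usage-based average and the midpoint of requests and limits will rarely match this neatly; the difference between them is a useful signal that requests or limits are set too far from actual usage.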

Problem 4: Not setting requests and limits

It’s essential that you give Kubernetes information about requests and limits for every single one of your workloads. Otherwise, a workload with no requests or limits set can end up gobbling up all of the resources available in a node.

This would prevent other workloads that do have requests and limits from getting all the Kubernetes resources they need, and reduce their performance.

Setting requests and limits is tricky. While some teams don’t set them at all, others set values that are too high during initial testing and only correct them later. But these requests and limits are really important for accurate cost estimation.

How to deal with this?

Make sure to set requests and limits for all of your Kubernetes workloads.
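
A simple check can catch containers that slip through. The sketch below works on a pod spec represented as a plain Python dictionary, for example one loaded from a manifest; the `containers`, `resources`, `requests`, and `limits` keys follow the Kubernetes pod spec, while the function name and the sample spec are hypothetical.

```python
def containers_missing_resources(pod_spec):
    """Return (container name, missing field) pairs for containers
    that have no requests or no limits set."""
    missing = []
    for container in pod_spec.get("containers", []):
        resources = container.get("resources") or {}
        for field in ("requests", "limits"):
            if not resources.get(field):
                missing.append((container.get("name", "<unnamed>"), field))
    return missing

# Hypothetical pod spec with one complete and two incomplete containers.
pod_spec = {
    "containers": [
        {
            "name": "api",
            "resources": {
                "requests": {"cpu": "500m", "memory": "1Gi"},
                "limits": {"cpu": "1", "memory": "2Gi"},
            },
        },
        {"name": "sidecar", "resources": {"requests": {"cpu": "100m"}}},
        {"name": "worker"},
    ]
}

for name, field in containers_missing_resources(pod_spec):
    print(f"container '{name}' has no {field}")
```

This only checks that the fields are present, not that the values are sensible; a container that sets a CPU request but no memory request would still pass.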

Understanding the costs of your containerized applications can become challenging when they all run together in Kubernetes. By using the strategies we mentioned above, you can ensure that your Kubernetes deployments run efficiently, and your organization doesn’t suffer from massive cloud waste.

Can you automate Kubernetes cost management?

Luckily, there are third-party solutions that automate a lot of the effort that goes into managing, forecasting, and reporting on Kubernetes costs.

To see how it works, get started with CAST AI’s free cost monitoring module. All it takes is connecting your cluster and you can analyze your Kubernetes costs in real time, never going over budget again.


FAQ

Kubernetes is an open-source technology, but that doesn’t mean it’s free to use. The operational expenses will depend on how you deploy it. You can choose to either self-host Kubernetes or use a managed Kubernetes solution.

If you choose a self-hosted Kubernetes solution, you will need to budget not just for the nodes required to run your workloads, but also for the nodes required to operate your control plane and the personnel to manage the operation. You’ll need to manage nodes that house your control plane, as well as separate worker nodes that host your application workloads.

In a managed Kubernetes scenario, you don’t have to worry about creating or managing your Kubernetes clusters, or about creating the control plane or installing its components like etcd, kube-apiserver, or kube-scheduler. AWS, Google Cloud, and Microsoft Azure are the three largest managed Kubernetes providers, offering Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS) respectively. The managed control plane of all of these services is priced similarly at $0.10/hour.

Although Kubernetes is an open-source technology, it is not exactly free to use. The cost of running a Kubernetes cluster depends on how you deploy it: either through self-hosting or using a managed Kubernetes service like Google Kubernetes Engine (GKE), Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS).

Because Kubernetes is an open-source solution, there is no license fee for the software itself. The costs come from the infrastructure it runs on and the people who operate it.

To effectively manage a Kubernetes cluster, you need to employ DevOps and SRE experts to build an operations team. The main task of this team is balancing pod and node deployments with performance and availability requirements – which involves a lot of configuration, management, and maintenance tasks.

The cost will depend on how you choose to deploy Kubernetes: using self-hosting or running it in a managed Kubernetes solution.

If you pick a self-hosted Kubernetes solution, you must budget not only for the nodes needed to run your workloads, but also for the nodes needed to run your control plane and the employees needed to oversee the operation. You will need to manage control plane nodes as well as worker nodes that host your application workloads.

In managed Kubernetes, you don’t have to worry about constructing or managing Kubernetes clusters, or about creating the control plane or installing its components – so some operations costs are lower.
